Introduction to Attention Mechanisms

Attention mechanisms have revolutionized natural language processing (NLP) and other AI domains. They allow models to focus on relevant parts of input data, improving performance in tasks like translation, text generation, and image captioning.

The transformer architecture, introduced in 2017, popularized attention mechanisms. Its core component, Multi-head Attention (MHA), enables models to process input sequences in parallel, capturing diverse relationships. MHA has powered large language models (LLMs) like BERT, GPT, and T5, but it faces challenges with memory efficiency.

The DeepSeek-V2 paper proposes Multi-head Latent Attention (MLA) as a solution to these issues. MLA optimizes MHA by compressing key-value (KV) states, reducing memory usage and accelerating inference. This article explores MHA and MLA in depth, compares their mechanisms, explains why MLA is superior, and demonstrates applications in programming, legal, healthcare, and finance domains.

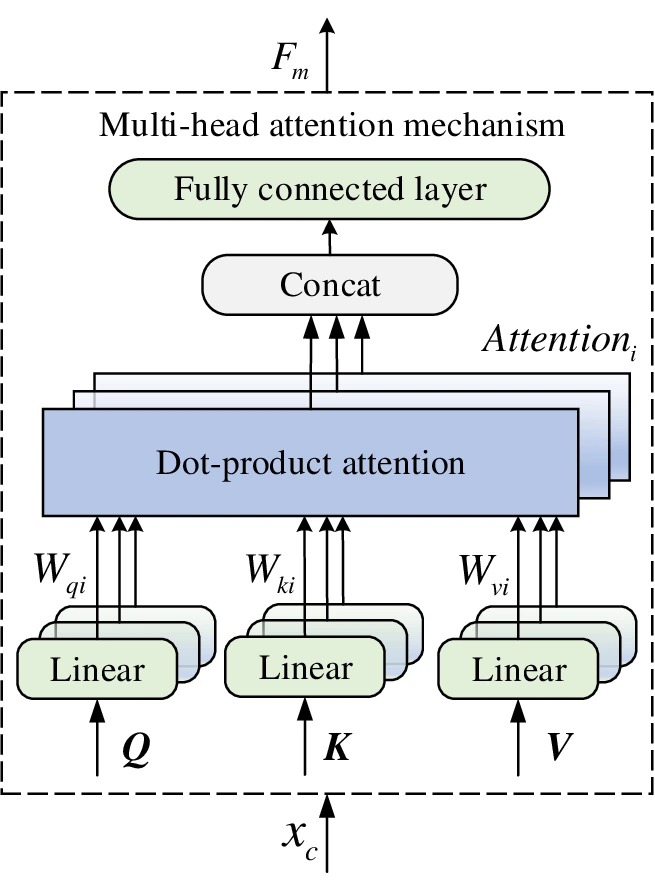

Multi-head Attention (MHA) Explained

MHA is a mechanism that allows transformers to attend to multiple parts of an input sequence simultaneously. It splits the attention process into multiple “heads,” each focusing on different aspects of the data.

In MHA, the input is represented as queries (Q), keys (K), and values (V), typically derived from the input embeddings. Each head computes attention scores using scaled dot-product attention, defined as:

\[ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V \]

Here, \(d_k\) is the dimension of the keys. Dividing by \(\sqrt{d_k}\) keeps the dot products from growing with the key dimension, which would otherwise push the softmax into saturated regions with very small gradients.

Each head processes a subset of the input dimensions, and the outputs are concatenated and linearly transformed. This parallelism allows MHA to capture diverse relationships, such as syntactic and semantic dependencies in text.
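The formula and the head-splitting step can be sketched in a few lines of PyTorch. This is a minimal illustration rather than a full MHA layer: the helper names `scaled_dot_product_attention` and `split_heads` are chosen here for the example, and the input is used directly as queries, keys, and values, whereas a real layer would first apply learned projections.

```python
import torch


def scaled_dot_product_attention(q, k, v):
    """q, k, v: (..., seq_len, d_k). Returns softmax(q k^T / sqrt(d_k)) v and the weights."""
    d_k = q.size(-1)
    scores = q @ k.transpose(-2, -1) / d_k ** 0.5
    weights = torch.softmax(scores, dim=-1)
    return weights @ v, weights


def split_heads(x, num_heads):
    """(batch, seq_len, d_model) -> (batch, num_heads, seq_len, d_model // num_heads)."""
    b, n, d_model = x.shape
    return x.view(b, n, num_heads, d_model // num_heads).transpose(1, 2)


torch.manual_seed(0)
x = torch.randn(2, 5, 16)                # batch=2, seq_len=5, d_model=16
q = k = v = split_heads(x, num_heads=4)  # assumption: no learned projections here
out, weights = scaled_dot_product_attention(q, k, v)

# Concatenate the heads back into (batch, seq_len, d_model).
merged = out.transpose(1, 2).reshape(2, 5, 16)
```

Each head produces its own 5 x 5 attention matrix, and every row of that matrix sums to 1.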

MHA stores full-dimensional KV states in a KV cache, which grows with sequence length. For long sequences or large models, this cache becomes a memory bottleneck, slowing inference and limiting scalability.

Challenges of MHA

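The KV cache in MHA is memory-intensive. For a model with hidden dimension \(d_{\text{model}}\), sequence length \(n\), and batch size \(b\), the KV cache holds \(2 \cdot b \cdot n \cdot d_{\text{model}}\) values per layer (one key vector and one value vector per token), so it grows linearly with both sequence length and batch size.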

In practice, this can require gigabytes of memory for long sequences, making MHA impractical for resource-constrained devices or applications requiring extended contexts, like document summarization.
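To make the scale concrete, the estimate above can be turned into a small calculator. The function name, the layer count, and the 2-byte (fp16) element size are assumptions added for this example; the formula itself is the one given above, multiplied by the number of layers.

```python
def mha_kv_cache_bytes(batch, seq_len, d_model, num_layers, bytes_per_value=2):
    """Keys + values for every token in every layer (assumes fp16 by default)."""
    return 2 * batch * seq_len * d_model * num_layers * bytes_per_value


size = mha_kv_cache_bytes(batch=1, seq_len=32_768, d_model=4096, num_layers=32)
print(f"{size / 2**30:.1f} GiB")  # prints "16.0 GiB"
```

Under these assumed dimensions, a single 32k-token sequence already needs 16 GiB for the cache alone, before counting model weights.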

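Additionally, positional encodings such as RoPE are applied directly to the keys, so position information is baked into the cached states. This complicates cache compression: if RoPE were applied to keys reconstructed from a compressed cache, the keys of all earlier tokens would have to be recomputed at each decoding step, adding computational overhead during inference.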

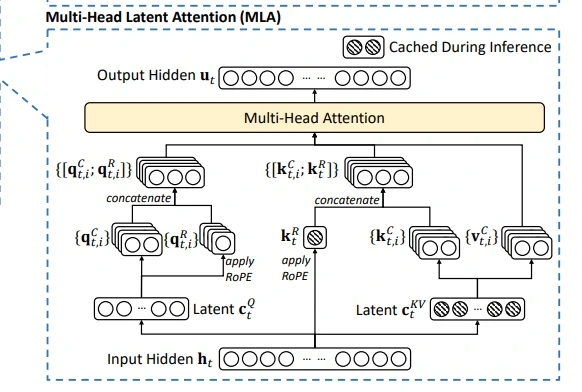

Multi-head Latent Attention (MLA) Introduced

MLA, proposed by DeepSeek, is an advanced attention mechanism that addresses MHA’s memory and computational inefficiencies. It compresses KV states into a low-dimensional latent space using low-rank factorization.

In MLA, keys and values are projected into a latent space with dimension \(r\) (where \(r \ll d_{\text{model}}\)) using a down-projection matrix. Only these compressed states are cached, significantly reducing memory usage.

During attention computation, an up-projection matrix restores the latent states to the original dimension, preserving expressiveness. This process trades some computational overhead for substantial memory savings.

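MLA also incorporates decoupled Rotary Position Embeddings (RoPE): positional information is carried by a small set of extra query and key dimensions rather than by the compressed latent. Because RoPE depends on token position, applying it to keys reconstructed from the latent would force those keys to be recomputed for every cached token during inference. Keeping the latent position-free avoids this recomputation.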

Mathematical Foundations of MLA

To understand MLA’s efficiency, consider its mathematical formulation. In MHA, the KV states are full-dimensional matrices: \(K, V \in \mathbb{R}^{n \times d_{\text{model}}}\).

In MLA, the layer input \(X \in \mathbb{R}^{n \times d_{\text{model}}}\) is compressed using a down-projection matrix \(W_{\text{down}} \in \mathbb{R}^{d_{\text{model}} \times r}\):

\[ K_{\text{latent}} = X W_{\text{down}}, \quad V_{\text{latent}} = X W_{\text{down}} \]

Here, \(K_{\text{latent}}, V_{\text{latent}} \in \mathbb{R}^{n \times r}\), and \(r\) is the latent rank (e.g., 64 or 128, much smaller than \(d_{\text{model}}\)). Because keys and values share the same down-projection, the two latents are identical; DeepSeek-V2 treats them as a single joint latent.

The KV cache stores only this latent, reducing memory usage from \(O(n \cdot d_{\text{model}})\) to \(O(n \cdot r)\).

During attention, up-projection matrices \(W_{\text{up}}^K, W_{\text{up}}^V \in \mathbb{R}^{r \times d_{\text{model}}}\) reconstruct the keys and values:

\[ K = K_{\text{latent}} W_{\text{up}}^K, \quad V = V_{\text{latent}} W_{\text{up}}^V \]

The attention computation then proceeds as in MHA, using the reconstructed \(K\) and \(V\).
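The compression and reconstruction steps can be checked numerically with a short sketch. Everything below is illustrative: the weights are random stand-ins for trained matrices, a single head is used, and `Q` is a placeholder for projected queries. The last two lines also show that the key up-projection can be folded into the query side, so attention scores can be computed against the cached latent without materializing full-width keys.

```python
import torch

torch.manual_seed(0)
n, d_model, r = 10, 64, 8

X = torch.randn(n, d_model)
W_down = torch.randn(d_model, r) / d_model ** 0.5  # random stand-in weights
W_up_k = torch.randn(r, d_model) / r ** 0.5
W_up_v = torch.randn(r, d_model) / r ** 0.5

latent = X @ W_down   # (n, r): the only tensor that needs caching
K = latent @ W_up_k   # (n, d_model), rank at most r
V = latent @ W_up_v   # (n, d_model), rank at most r

Q = torch.randn(n, d_model)                  # placeholder queries
scores_full = Q @ K.T                        # uses reconstructed keys
scores_absorbed = (Q @ W_up_k.T) @ latent.T  # same scores, straight from the latent
```

The two score matrices agree up to floating-point error because \(Q (C W_{\text{up}}^K)^T = (Q (W_{\text{up}}^K)^T) C^T\), where \(C\) is the cached latent.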

Decoupled RoPE applies positional encodings only to non-compressed dimensions, avoiding interference with the latent space. This ensures stable performance across sequence lengths.

Image from: DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model.
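The decoupled RoPE idea described above can be sketched as follows. This is a simplified illustration under stated assumptions: the `rope` helper, the matrix name `W_kr`, the width `d_rope`, and the base of 10000 are choices made for this example, and the weights are random. The latent stays position-free, while a small separate key carries the rotary encoding, and both are cached side by side.

```python
import torch


def rope(x, positions, base=10000.0):
    """Rotate consecutive pairs of features in x (seq_len, d) by position-dependent angles."""
    d = x.shape[-1]
    inv_freq = base ** (-torch.arange(0, d, 2, dtype=torch.float32) / d)  # (d/2,)
    angles = positions[:, None].float() * inv_freq[None, :]               # (seq_len, d/2)
    cos, sin = angles.cos(), angles.sin()
    x1, x2 = x[..., 0::2], x[..., 1::2]
    out = torch.empty_like(x)
    out[..., 0::2] = x1 * cos - x2 * sin
    out[..., 1::2] = x1 * sin + x2 * cos
    return out


torch.manual_seed(0)
n, d_model, r, d_rope = 6, 32, 8, 4
x = torch.randn(n, d_model)
W_down = torch.randn(d_model, r) / d_model ** 0.5    # random stand-in weights
W_kr = torch.randn(d_model, d_rope) / d_model ** 0.5  # hypothetical name for the RoPE key projection

positions = torch.arange(n)
c_kv = x @ W_down                   # position-free latent, shape (n, r)
k_rope = rope(x @ W_kr, positions)  # small position-carrying key, shape (n, d_rope)

# Per-token cache entry: the latent plus the small RoPE key.
cache = torch.cat([c_kv, k_rope], dim=-1)  # (n, r + d_rope)
```

Because the rotation is applied only to `k_rope`, the latent `c_kv` can be cached once and reused at any later decoding step without being re-encoded.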

Key Differences Between MHA and MLA

The differences between MHA and MLA are critical to understanding their trade-offs:

- KV Cache Size:
  - MHA: Stores full-dimensional KV states, requiring \(O(n \cdot d_{\text{model}})\) memory.
  - MLA: Stores compressed latent states, requiring \(O(n \cdot r)\) memory, where \(r \ll d_{\text{model}}\).

- Positional Encoding:
  - MHA: Applies positional encodings (such as RoPE) directly to queries and keys, so the cached keys already carry position information.
  - MLA: Uses decoupled RoPE, carrying position in a small set of separate dimensions so that the compressed latent stays position-free and keys need not be recomputed during inference.

- Computational Overhead:
  - MHA: Straightforward computation but memory-intensive.
  - MLA: Adds down- and up-projection steps, increasing computation but reducing memory usage.

- Scalability:
  - MHA: Limited by memory constraints for long sequences or large models.
  - MLA: Scales better due to reduced memory requirements, supporting longer contexts and larger models.

Why MLA is Better Than MHA

MLA offers significant advantages over MHA, making it a game-changer for LLMs:

- Memory Efficiency: DeepSeek-V2 reports a 93.3% reduction in KV cache size relative to its predecessor, DeepSeek 67B. For a model with \(d_{\text{model}} = 4096\) and \(r = 128\), each cached token shrinks from full-width keys and values to a 128-dimensional latent, which makes deployment on memory-limited devices more practical.

- Faster Inference: A smaller KV cache reduces the memory traffic of each decoding step and leaves room for larger batches. DeepSeek-V2 reports a maximum generation throughput 5.76 times that of DeepSeek 67B, which matters for real-time applications like chatbots.

- Scalability: MLA’s reduced memory footprint allows LLMs to process longer sequences (e.g., 128k tokens) or scale to larger models without requiring high-end hardware. This democratizes access to advanced AI.

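- Preserved Expressiveness: The up-projection matrices restore keys and values to the original dimension, so the attention computation itself is unchanged. The low-rank bottleneck does constrain what keys and values can represent, but empirical results from DeepSeek-V2 show comparable or better performance on benchmarks like MMLU.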

- Edge Deployment: MLA’s efficiency makes it feasible to run LLMs on edge devices, such as smartphones or IoT systems, expanding AI’s reach.

- Cost Efficiency: Lower memory and computational requirements reduce operational costs for cloud-based AI services, benefiting providers and users.

These advantages position MLA as a superior choice for modern AI applications, particularly where resource constraints are a concern.
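As a worked version of the memory-efficiency example above, consider the simplified formulation used in this article: one shared latent of width \(r\) per token, ignoring the small extra key that decoupled RoPE adds. Under those assumptions, the number of cached values per token per layer compares as:

\[ \frac{\text{MHA cache}}{\text{MLA cache}} = \frac{2 \cdot d_{\text{model}}}{r} = \frac{2 \cdot 4096}{128} = 64 \]

This is an idealized ratio for these example dimensions, not a figure reported by DeepSeek-V2.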

Technical Workflow of MLA

MLA’s workflow can be broken down into clear steps:

- Input Embedding: The input sequence is embedded into a high-dimensional space, producing a matrix \(X \in \mathbb{R}^{n \times d_{\text{model}}}\).

- Query Projection: Queries are computed as \(Q = X W_Q\), where \(W_Q \in \mathbb{R}^{d_{\text{model}} \times d_{\text{model}}}\).

- KV Compression: The input is compressed into the latent space. Keys and values share one down-projection, so the two latents coincide: \(K_{\text{latent}} = V_{\text{latent}} = X W_{\text{down}}\).

- Caching: Only this single \(n \times r\) latent is stored in the KV cache.

- Up-Projection: During attention, keys and values are reconstructed: \(K = K_{\text{latent}} W_{\text{up}}^K\), \(V = V_{\text{latent}} W_{\text{up}}^V\).

- Attention Computation: Scaled dot-product attention is applied: \(\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V\).

- Output Projection: The attention output is reshaped and projected to produce the final output.

This workflow minimizes memory usage while maintaining computational fidelity, making MLA highly efficient.
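The caching step is easiest to see in token-by-token decoding. The sketch below walks through the workflow for one new token at a time. It is a naive illustration under stated assumptions: `decode_step` and `latent_cache` are names invented for this example, the weights are random stand-ins, RoPE is omitted, and keys and values are re-expanded from the whole cache at every step.

```python
import torch

torch.manual_seed(0)
d_model, num_heads, r = 32, 4, 8
head_dim = d_model // num_heads

# Random weights stand in for trained ones (assumption).
W_q = torch.randn(d_model, d_model) / d_model ** 0.5
W_down = torch.randn(d_model, r) / d_model ** 0.5
W_up_k = torch.randn(r, d_model) / r ** 0.5
W_up_v = torch.randn(r, d_model) / r ** 0.5
W_o = torch.randn(d_model, d_model) / d_model ** 0.5


def decode_step(x_t, latent_cache):
    """x_t: (1, d_model) new token; latent_cache: (t, r) latents of earlier tokens."""
    # Compress the new token and append it to the cache.
    latent_cache = torch.cat([latent_cache, x_t @ W_down], dim=0)  # (t+1, r)
    q = (x_t @ W_q).view(1, num_heads, head_dim).transpose(0, 1)   # (h, 1, hd)
    # Up-project the cached latents into per-head keys and values.
    k = (latent_cache @ W_up_k).view(-1, num_heads, head_dim).transpose(0, 1)
    v = (latent_cache @ W_up_v).view(-1, num_heads, head_dim).transpose(0, 1)
    scores = q @ k.transpose(-2, -1) / head_dim ** 0.5             # (h, 1, t+1)
    out = torch.softmax(scores, dim=-1) @ v                        # (h, 1, hd)
    out = out.transpose(0, 1).reshape(1, d_model)
    return out @ W_o, latent_cache


x = torch.randn(5, d_model)  # five tokens, fed one at a time
cache = torch.empty(0, r)
outputs = []
for t in range(x.shape[0]):
    y_t, cache = decode_step(x[t:t + 1], cache)
    outputs.append(y_t)
y = torch.cat(outputs, dim=0)
```

After five steps the cache holds five rows of width `r`, rather than five keys and five values of width `d_model`. Since each token only attends to tokens already in the cache, this loop is causal by construction.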

Programming Implementation

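Implementing MLA requires modifying the attention mechanism in a transformer model. Below is a simplified Python implementation using PyTorch, inspired by the mla-experiments repository. To keep the core idea visible, it omits the KV cache, causal masking, and decoupled RoPE, and recomputes the latent from the input on every call: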

```python
import torch
import torch.nn as nn


class MLAttention(nn.Module):
    """Simplified multi-head latent attention (no KV cache, mask, or RoPE)."""

    def __init__(self, d_model, num_heads, kv_lora_rank):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.d_model = d_model
        self.num_heads = num_heads
        self.kv_lora_rank = kv_lora_rank
        self.head_dim = d_model // num_heads

        # Low-rank projection matrices
        self.W_down_kv = nn.Linear(d_model, kv_lora_rank, bias=False)  # shared KV down-projection
        self.W_up_k = nn.Linear(kv_lora_rank, d_model, bias=False)     # latent -> keys
        self.W_up_v = nn.Linear(kv_lora_rank, d_model, bias=False)     # latent -> values
        self.W_q = nn.Linear(d_model, d_model, bias=False)
        self.W_o = nn.Linear(d_model, d_model, bias=False)

    def forward(self, x):
        batch_size, seq_len, _ = x.size()

        # Query projection: (batch, heads, seq_len, head_dim)
        q = self.W_q(x).view(batch_size, seq_len, self.num_heads, self.head_dim).transpose(1, 2)

        # Low-rank KV compression: this latent is what a KV cache would store
        kv_latent = self.W_down_kv(x)
        k = self.W_up_k(kv_latent).view(batch_size, seq_len, self.num_heads, self.head_dim).transpose(1, 2)
        v = self.W_up_v(kv_latent).view(batch_size, seq_len, self.num_heads, self.head_dim).transpose(1, 2)

        # Scaled dot-product attention (no causal mask)
        scores = torch.matmul(q, k.transpose(-2, -1)) / (self.head_dim ** 0.5)
        attn_weights = torch.softmax(scores, dim=-1)
        out = torch.matmul(attn_weights, v)

        # Merge heads and apply the output projection
        out = out.transpose(1, 2).contiguous().view(batch_size, seq_len, self.d_model)
        out = self.W_o(out)
        return out


# Example usage
d_model = 512
num_heads = 8
kv_lora_rank = 64

model = MLAttention(d_model, num_heads, kv_lora_rank)
input_tensor = torch.randn(32, 128, d_model)  # Batch size: 32, Sequence length: 128
output = model(input_tensor)                  # Shape: (32, 128, 512)
```

This implementation includes:

- Low-rank Projections: The W_down_kv, W_up_k, and W_up_v matrices handle KV compression and reconstruction.

- Multi-head Attention: The queries, keys, and values are split into heads for parallel processing.

- Output Projection: A final linear layer \(W_o\) ensures compatibility with the transformer architecture.
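The forward pass above attends over the whole sequence in both directions. For autoregressive generation, a causal mask is normally applied to the scores before the softmax. The helper below is one possible way to do that; the function name `causal_attention_weights` is hypothetical and is not part of the referenced repositories.

```python
import torch


def causal_attention_weights(scores):
    """scores: (..., seq_len, seq_len). Masks future positions, then applies softmax."""
    seq_len = scores.size(-1)
    # True above the diagonal marks positions a token must not attend to.
    future = torch.triu(
        torch.ones(seq_len, seq_len, dtype=torch.bool, device=scores.device),
        diagonal=1,
    )
    return torch.softmax(scores.masked_fill(future, float("-inf")), dim=-1)


torch.manual_seed(0)
scores = torch.randn(2, 4, 5, 5)  # (batch, heads, seq_len, seq_len)
weights = causal_attention_weights(scores)
```

In the `forward` method, this would take the place of the plain `torch.softmax(scores, dim=-1)` call.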

For a practical example, consider integrating MLA into a transformer model. Below is a simplified transformer layer incorporating MLA:

```python
import torch
import torch.nn as nn

# Assumes the MLAttention class defined above is in scope.


class TransformerLayer(nn.Module):
    """Post-norm transformer layer that uses MLAttention for self-attention."""

    def __init__(self, d_model, num_heads, kv_lora_rank, d_ff, dropout=0.1):
        super().__init__()
        self.attention = MLAttention(d_model, num_heads, kv_lora_rank)
        self.norm1 = nn.LayerNorm(d_model)
        self.ffn = nn.Sequential(
            nn.Linear(d_model, d_ff),
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(d_ff, d_model),
        )
        self.norm2 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x):
        # Attention with residual connection
        attn_output = self.attention(x)
        x = self.norm1(x + self.dropout(attn_output))

        # Feed-forward with residual connection
        ffn_output = self.ffn(x)
        x = self.norm2(x + self.dropout(ffn_output))
        return x


# Example usage
d_ff = 2048
layer = TransformerLayer(d_model=512, num_heads=8, kv_lora_rank=64, d_ff=d_ff)
input_tensor = torch.randn(32, 128, 512)
output = layer(input_tensor)  # Shape: (32, 128, 512)
```

This code demonstrates how MLA can be embedded in a transformer layer, suitable for tasks like text generation or classification.

For complete implementations, refer to the mla-experiments and TransMLA repositories. The TransMLA notebook (qwen_transMLA_rope.ipynb) provides a step-by-step guide to converting Grouped Query Attention (GQA) models to MLA.

Citations:

- GitHub – ambisinister/mla-experiments: Experiments on Multi-Head Latent Attention.

- GitHub – fxmeng/TransMLA: TransMLA: Multi-Head Latent Attention Is All You Need.

Applications Across Domains

MLA’s efficiency makes it a versatile tool for LLMs in multiple domains. Below, we explore its applications in legal, healthcare, and finance sectors, with detailed examples.

Legal Field

The legal industry increasingly relies on AI to streamline repetitive tasks. MLA’s efficiency enhances LLMs for legal applications by enabling faster processing and longer context handling.

- Contract Analysis: LLMs with MLA can analyze complex contracts, extracting key clauses (e.g., termination conditions) and identifying risks (e.g., ambiguous terms). MLA’s reduced memory usage allows processing of lengthy documents without excessive hardware demands.

- Legal Research: Lawyers use AI to retrieve relevant case law and statutes. MLA’s ability to handle long contexts ensures comprehensive searches across large legal databases, improving accuracy and speed.

- Predictive Analytics: MLA-powered models analyze historical case data to predict litigation outcomes. For example, they can estimate the likelihood of winning a patent dispute, aiding strategic planning.

AI’s impact on law is well-documented. A 2025 article highlights how AI automates document review, freeing lawyers for high-value tasks. MLA’s optimizations make these tools more accessible to smaller firms.

Citation: How AI is transforming the legal profession (2025).

Healthcare

Healthcare benefits from AI’s ability to process vast datasets. MLA’s efficiency supports LLMs in medical applications, particularly where real-time processing is critical.

- Medical Record Analysis: MLA enables LLMs to process electronic health records (EHRs), identifying trends (e.g., recurring symptoms) or flagging anomalies (e.g., abnormal lab results). Its low memory footprint supports deployment on hospital servers.

- Drug Discovery: Analyzing chemical compound datasets is computationally intensive. MLA’s speed accelerates the identification of drug candidates, reducing time-to-market for new treatments.

- Clinical Decision Support: Real-time analysis of patient data (e.g., vital signs, medical history) supports treatment recommendations. MLA’s fast inference ensures timely decision-making in critical care settings.

For example, an LLM with MLA could process a patient’s EHR to suggest a diagnosis of sepsis based on subtle patterns, improving outcomes.

Finance

The financial industry utilizes artificial intelligence to enhance fraud detection, evaluate risks, and improve customer support.

- Fraud Detection: MLA-powered LLMs analyze transaction histories to identify suspicious patterns, such as unusual spending. Its speed enables real-time monitoring, minimizing financial losses.

- Risk Assessment: By processing market data and financial reports, MLA supports risk prediction for investments. Its memory efficiency allows analysis of extensive datasets, improving accuracy.

- Customer Service: Financial chatbots handle complex queries (e.g., loan eligibility). MLA’s low latency ensures responsive interactions, enhancing user satisfaction.

For instance, a bank could use an MLA-optimized LLM to detect fraudulent credit card transactions within milliseconds, protecting customers.

Visual Illustrations

Diagrams are essential for explaining MLA’s complex concepts. Below are recommended images, their descriptions, and sources, ensuring ethical use with proper citations.

- KV Cache Bottleneck Diagram:
  - Description: A diagram comparing MHA’s full-dimensional KV cache with MLA’s compressed latent cache. It shows memory usage (e.g., gigabytes vs. megabytes) for a sequence length of 10,000 tokens.
  - Source: A Visual Walkthrough of DeepSeek’s Multi-Head Latent Attention (MLA).
  - Usage: Include this to illustrate MLA’s memory efficiency. Cite the source and obtain permission if required.

- MLA Process Flowchart:
  - Description: A flowchart detailing MLA’s workflow, including down-projection, caching, up-projection, and attention computation. Arrows show data flow, with annotations for matrix dimensions.
  - Source: DeepSeek-V3 Explained 1: Multi-head Latent Attention (Figure 6).
  - Usage: Use this to explain MLA’s technical steps. Cite the article and ensure compliance with usage rights.

- MHA vs. MLA Comparison:
  - Description: A side-by-side diagram of MHA and MLA, highlighting differences in KV cache size, positional encoding, and computation steps. Color-coded elements (e.g., red for MHA’s large cache, green for MLA’s small cache) enhance clarity.
  - Source: Understanding Attention Mechanisms Using Multi-Head Attention.
  - Usage: Adapt this MHA diagram to include MLA, or use it as a base for comparison. Cite the source and modify ethically.

- RoPE Decoupling Diagram:
  - Description: A diagram showing how decoupled RoPE applies positional encodings to non-compressed dimensions in MLA, contrasted with MHA’s integrated approach.
  - Source: DeepSeek-V3 Explained 1: Multi-head Latent Attention (Figure 5).
  - Usage: Include this to clarify MLA’s positional encoding advantage. Cite the source and verify usage permissions.

Practical Considerations for MLA

Implementing MLA requires careful consideration of hyperparameters, such as the latent rank \(r\). A smaller \(r\) reduces memory usage but may compromise expressiveness, while a larger \(r\) preserves more expressiveness at the cost of a larger cache.
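One way to build intuition for this trade-off is to look at how well a low-rank factorization can approximate a full projection matrix as \(r\) grows. The sketch below uses truncated SVD on a random matrix, which is an assumption made purely for illustration: random matrices are close to the hardest case for compression, and MLA models are usually trained with the bottleneck in place rather than truncated afterwards.

```python
import torch

torch.manual_seed(0)
d_model = 64
W = torch.randn(d_model, d_model)  # stand-in for a full key projection (assumption)
U, S, Vh = torch.linalg.svd(W, full_matrices=False)


def rank_r_error(r):
    """Relative Frobenius error of the best rank-r approximation of W."""
    W_r = (U[:, :r] * S[:r]) @ Vh[:r, :]
    return (torch.linalg.norm(W - W_r) / torch.linalg.norm(W)).item()


errors = {r: rank_r_error(r) for r in (4, 16, 32, 64)}
for r, err in errors.items():
    print(f"r={r:3d}  relative error={err:.3f}")
```

The error falls as \(r\) increases and reaches roughly zero at full rank, which mirrors the memory-versus-expressiveness trade-off described above.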

Training MLA models involves optimizing the projection matrices (\(W_{\text{down}}\), \(W_{\text{up}}^K\), \(W_{\text{up}}^V\)). Techniques like LoRA (Low-Rank Adaptation) can fine-tune these matrices efficiently, as explored in the mla-experiments repository.

Inference with MLA benefits from hardware acceleration (e.g., GPUs, TPUs). Frameworks like PyTorch and JAX support MLA’s matrix operations, ensuring compatibility with existing transformer pipelines.

For deployment, MLA’s efficiency enables edge computing. For example, a legal firm could deploy an MLA-optimized LLM on a local server to analyze contracts, avoiding cloud costs.

Limitations and Future Directions

While MLA is a significant advancement, it has limitations:

- Computational Overhead: The projection steps add computation, which may offset memory savings in small models.

- Hyperparameter Sensitivity: The latent rank \(r\) requires careful tuning to balance efficiency and performance.

- Domain-Specific Fine-Tuning: MLA’s benefits may vary across domains, requiring tailored implementations.

Future research could explore adaptive latent ranks, where \(r\) adjusts dynamically based on input complexity. Combining MLA with other optimizations, like sparse attention, could further enhance efficiency.

Conclusion

Multi-head Latent Attention (MLA) is a transformative advancement over Multi-head Attention (MHA), offering unparalleled memory efficiency, faster inference, and enhanced scalability. By compressing KV states and optimizing positional encodings, MLA enables LLMs to process longer contexts and scale to larger models with fewer resources. The provided programming examples demonstrate MLA’s implementation, while applications in legal, healthcare, and finance domains highlight its versatility. Diagrams, such as those from DeepSeek-V3 Explained, can clarify MLA’s concepts for readers. As AI continues to evolve, MLA’s innovations pave the way for more efficient, accessible, and impactful systems across industries.
